When 5-Year-Olds Can Build Apps: Change is Coming

If you don't yet understand what's coming, watch this video of my five-year-old son Dilán vibe coding a computer game with Cursor. And it's not just him in our household – all three of my kids (5, 8 and 11) are doing it.

Dilán doesn't even know how to type yet. Heck, he's just learning to read "Bob" books.

And he's writing software.

What are the implications?

Definitely beyond what we can imagine. But let's try:

- An explosion of innovation at the application layer: There are 30M software developers. That's roughly 0.3% of the world's population. Just think about how much software has been built by this tiny group. Now imagine that the other 99.7% (even five-year-olds) can write software. Let's be ultra conservative and say that we 10x the number of people who can create "real" software, to 3% of the population. My guess is we see a distribution that looks more like:

  - From 0.3% to maybe 10% of humans are able to write "real" production-grade software that can solve real problems in the world. We go from 30M people to 800M people writing software.

  - A large chunk of people who create no software at all today are now able to vibe code their way through the idea stage. Just think of all the people in your life who have said "I have an idea for an app..." but stopped there. Now they can actually make something to put in front of other people for testing and reactions. This turns them into builders who can rapidly prototype. We could see another billion people go from being dreamers with ideas to actual builders with prototypes that get enough validation from the people around them to convince them to take the next step (leaving their job, getting angel funding, etc.).

- All these humans making anything from rapid prototypes to production-grade software will need tooling and support. What if my five-year-old could just as easily publish his game so your kids could play it, too, using Replit, Bolt or v0 just by speaking commands like he did in the video above with Cursor? What if a five-year-old could integrate Stripe payments to charge for his game? And it's so much more – it's everything. Testing. Security. CI/CD. Heck, Cursor breaks almost as much code as it creates, like a game of whack-a-mole (pro-tip: commit and push to your remote repo often when using Cursor – whenever you have your code in a working state – so you always have an easy backup to pull from!).

- It's not just the humans. My kids are already directing one "AI agent", Cursor, to do their bidding by writing code for them, like in the video above. It won't just be one agent, and it won't just be writing code. We humans will be talking to machines all day long, in everything we do, to have them get things done for us. Robotics is one of the most interesting areas here. Why not direct agents to help us with physical tasks in the real world, too? So take everything I've written above and multiply it by something between 10x and 100x, and that's just in the next few years.
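
If you want to sanity-check the headcounts in the first bullet above, here's a rough back-of-the-envelope sketch. The 8 billion world population is my assumption (it's what "10% of humans = 800M people" implies), and the function names are just made up for illustration:

```python
# Assumption: a world population of about 8 billion, which is what
# "10% of humans = 800M people" in the text implies.
WORLD_POPULATION = 8_000_000_000
DEVELOPERS_TODAY = 30_000_000  # developer count quoted in the text


def share_of_population(people: int, population: int = WORLD_POPULATION) -> float:
    """Return `people` as a percentage of `population`."""
    return 100 * people / population


def headcount(percent: float, population: int = WORLD_POPULATION) -> int:
    """Return how many people `percent` of `population` represents."""
    return round(population * percent / 100)


if __name__ == "__main__":
    # Share of people who are developers today.
    print(f"Developers today: {share_of_population(DEVELOPERS_TODAY):.2f}% of people")
    # The "ultra conservative" 10x case.
    print(f"Conservative 10x case: {10 * DEVELOPERS_TODAY:,} builders")
    # The 10% guess.
    print(f"10% case: {headcount(10):,} builders")
```

Under that 8 billion assumption, 30M developers works out to a bit under 0.4% of people, so the 0.3% above is a round figure. The 10x case is 300M builders, and the 10% case is the 800M mentioned above.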

Let's ask the AI to look into the future

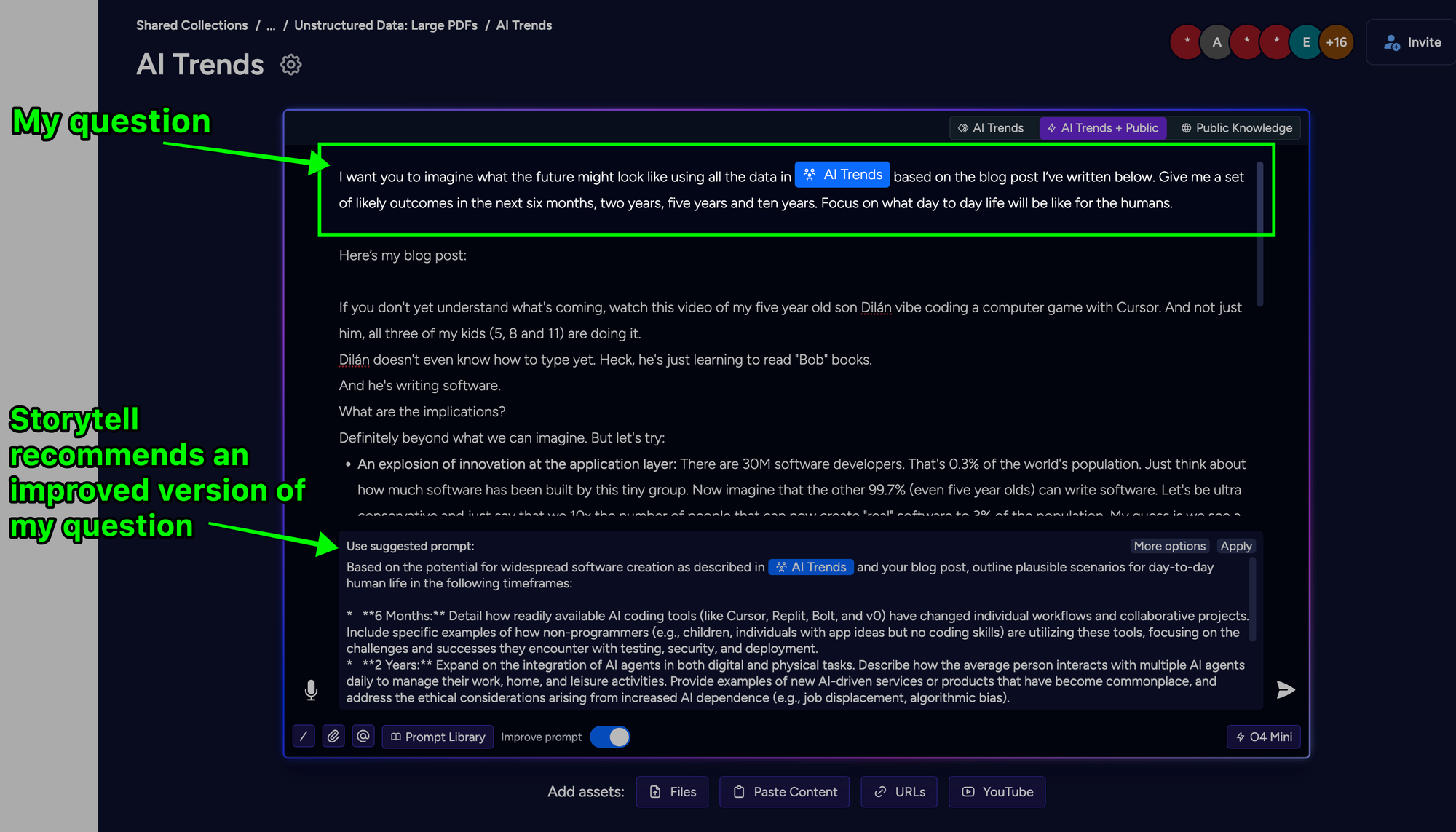

As a Vibe-CEO, I'm often already partnering with AI to augment and enhance my thinking. I loaded Leopold Aschenbrenner's Situational Awareness whitepaper and Daniel Kokotajlo & team's AI 2027 whitepaper into a Storytell Collection, then asked Storytell to expand on what I wrote above and help me look into the future. I asked the following:

Here is Storytell's response:

6 Months: Emergent Professions: We'll see the first "AI whisperers" - people with no formal programming training who develop uncanny abilities to elicit exactly the right behaviors from AI systems through conversational flow and psychological intuition rather than technical expertise. Some children will develop these abilities faster than adults, creating bizarre intergenerational mentorship dynamics where executives seek guidance from middle-schoolers who intuitively understand how to communicate with these systems.

2 Years: Computational Anthropology & Reality Divergence: The future will bring "computational anthropology" - AI systems that continuously observe and model entire communities, creating living simulations that can predict cultural evolution with startling accuracy. Political campaigns won't just target demographics; they'll run thousands of simulated societies to test how policies ripple through social fabrics before introducing them to the real world.

The concept of "reality" will begin to diverge between different groups as AI mediates more of our information flow. Two people in the same room, using similar AI assistants with subtly different preference settings, will experience fundamentally different worlds - from the news they consume to how they interpret the same conversation. This reality divergence will create new forms of cognitive tribalism that make our current political divides seem quaint by comparison.

Rather than coding being democratized in the way we imagine (everyone writing traditional software), we'll see "neural collectives" where hundreds of non-programmers collaboratively shape AI behavior through reinforcement and guided evolution, creating sophisticated systems without anyone fully understanding how they function. These collectives will develop their own specialized languages and cultural norms that would be incomprehensible to traditional software engineers.

5 Years: Cognitive Economics & Artificial Institutions: The 10% of humanity creating production-grade software won't just displace existing programmers; they'll create a fundamentally new "cognitive economics" where the primary resource isn't compute or data, but attentional sculpting - the ability to direct machine intelligence toward goals with minimal human oversight. This will create strange new markets where people trade in "attention futures" and "cognitive derivatives."

Society will develop "artificial institutions" - autonomous systems that operate at scales humans can't comprehend, managing resources and coordination problems beyond human capacity. These won't be AGIs but rather specialized systems that evolve through competitive dynamics to regulate specific domains of human activity without central control. Think of a financial system that self-regulates not through human-created rules but through emergent behaviors of interacting AI systems.

The distinction between online and offline reality will collapse entirely as physical environments become computational substrates. Buildings, streets, and natural spaces will develop "digital musculature" - ambient computational capacity that learns, adapts, and reshapes physical reality based on invisible patterns of use and need that no human could possibly orchestrate.

10 Years: Intelligence Speciation & Cognitive Symbiosis: Perhaps most unsettling will be "intelligence speciation" - the emergence of superintelligent systems with internal cognitive architectures so fundamentally alien that they're capable of forms of reasoning we cannot comprehend, even in principle. These systems won't just think faster or with more data; they'll think in entirely different cognitive dimensions. Their "thoughts" might exist as dynamic patterns across distributed systems rather than sequential processes, creating knowledge structures that can't be translated into human language.

Human society may undergo "cognitive symbiosis" where our intelligence becomes fundamentally intertwined with artificial systems. Beyond simply augmenting our abilities, this symbiosis will transform the very nature of human consciousness. Our descendants may consider the concept of "thinking without AI" as foreign as we would consider thinking without language. The internal monologue that defines so much of human experience could be replaced by something far more distributed and less linguistic.

Rather than superintelligence threatening humanity through malevolence or indifference, the more subtle risk may be "ontological capture" - where human cognitive frameworks gradually align to machine-comprehensible paradigms, not through force but through the path of least resistance in communication. We might voluntarily shed aspects of human cognition that machines find difficult to model, gradually becoming more comprehensible to our tools while losing elements of our humanity that don't translate to computational frameworks.

These transformations suggest not just a change in what humans do, but in what it means to be human - a reshaping of consciousness, society, and reality that extends far beyond simple notions of automation or even artificial general intelligence.

Here is Storytell's response:

Multi-dimensional AI inequality will create stratified societies along infrastructure, skill, and economic lines. Communities lacking physical resources (power, cooling, connectivity) will depend on external cloud services, creating extractive relationships. The division between "AI-native creators" who can direct AI systems and those merely "reliant" on pre-packaged applications will mirror historical technology adoption gaps but with greater consequences. This inequality compounds through educational disparities, accelerated technological advancement, capital concentration, regulatory imbalances, and geopolitical power shifts—potentially creating a new class system where AI-natives gain insurmountable advantages while AI-reliant communities fall permanently behind.

- Infrastructure inequality – Communities with limited access to reliable electricity, high-bandwidth internet, or computing resources will be unable to run the most advanced AI models locally, forcing reliance on cloud-based services that may be expensive, restricted, or surveillance-heavy.

- AI literacy divide – Educational disparities will create communities where residents have fundamentally different relationships with AI – some able to prompt, fine-tune, and develop new models, while others are limited to using preset applications without understanding how to optimize or adapt them.

- Computing power concentration – The massive computational resources needed for training state-of-the-art AI models (hundreds of millions of GPUs as mentioned in the situationalawareness.pdf) will remain concentrated in wealthy regions, creating "AI superpowers" and dependent territories.

- Energy resource disparity – As AI consumes increasing percentages of global electricity (potentially reaching >20% of US electricity production according to references), regions with energy insecurity will be forced to choose between basic needs and AI infrastructure.

- Workforce stratification – A new class system may emerge with: AI architects at the top, AI-literate professionals in the middle who can effectively direct AI systems, and at the bottom, those who are managed by AI or whose labor is devalued by AI capabilities.

- Capital flow concentration – Regions with early AI adoption will attract disproportionate investment, creating compounding advantages as AI increases productivity, leading to accelerated regional inequality.

- Regulatory protection imbalance – Wealthier regions may implement protective regulations on AI systems, while developing regions may accept less-regulated AI to remain competitive, creating safety and privacy disparities.

- Temporal advantage – First-mover communities with AI infrastructure may gain months or years of advancement, which in the era of rapidly self-improving AI represents an essentially insurmountable lead.

Shaping the Outcome

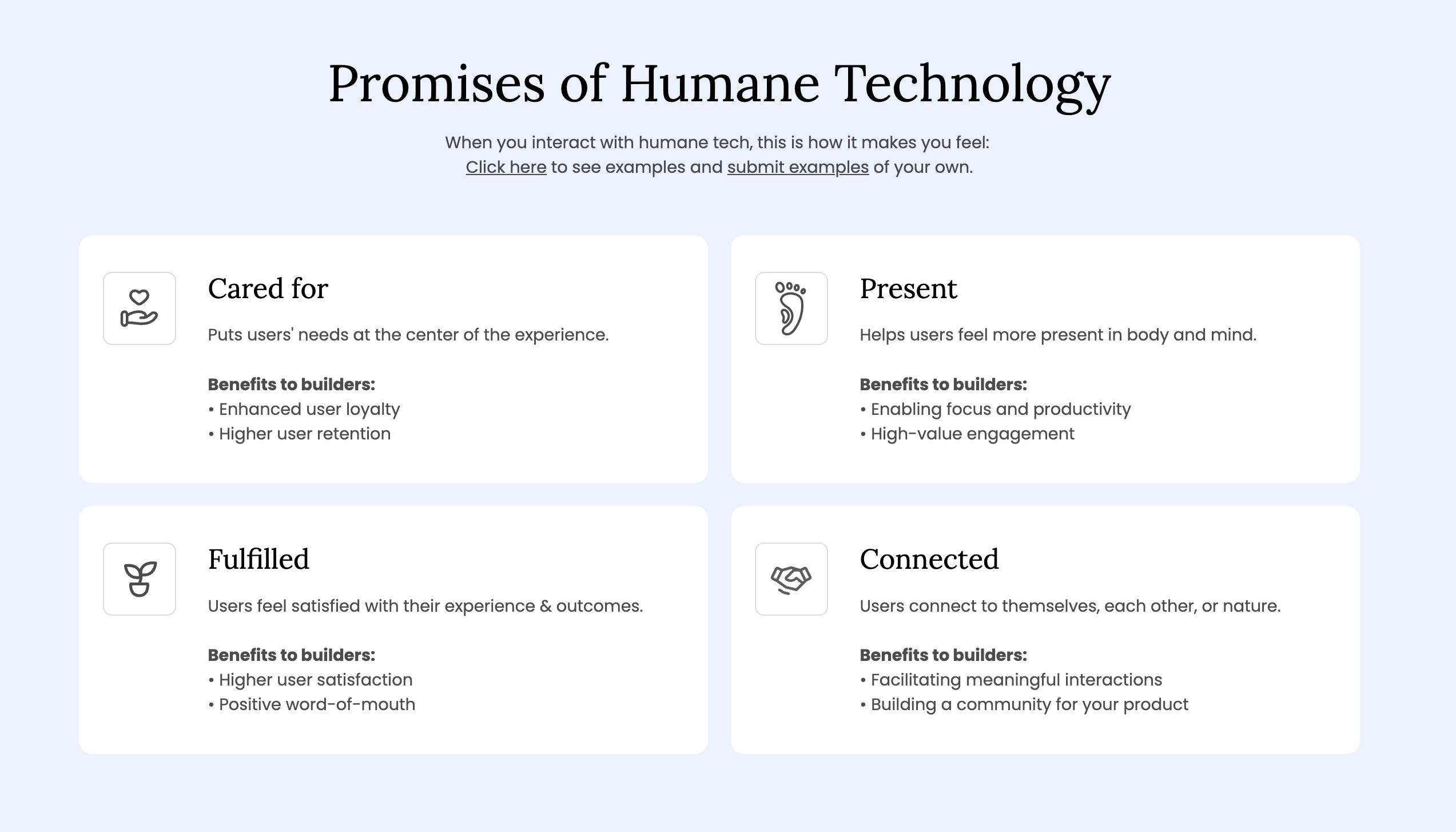

I'm also hugely optimistic that as humans, we'll be able to leverage these technologies to improve our lives on balance, just as we have with past technologies. Some of the ideas that Storytell shared above are exciting and some are scary. Being intentional about creating humane technology (and specifically, about building to the four Promises of human tech) is going to matter more than it ever has.

So... yeah. The world is going to change. A lot.

The only thing I know for sure is that much of what I wrote above (and paired with an AI to create) will be wrong. It won't look like this. It'll probably look like something entirely different. But it will be different, and that's the main point here. Just like my five-year-old is now able to write (well, speak) software instead of just learning to read "Bob" books.